A Polynomial Subspace Projection Approach for the Detection of Weak Voice Activity

This website demonstrates a polynomial subspace projection approach for the detection of weak voice activity, developed by Vincent W. Neo, Stephan Weiss and Patrick A. Naylor. It is joint work between the Speech and Audio Processing Lab at Imperial College London and the Department of Electronic and Electrical Engineering at the University of Strathclyde.

About This Work

Anechoic speech signals sampled at 48 kHz are taken from the VCTK corpus [1]. The room impulse responses (RIRs) from the source to an 8-channel hearing aid microphone array are taken from the Kayser database [2].

The noisy and reverberant speech at each microphone is generated by convolving the anechoic speech and the interferer with the corresponding room impulse responses before adding noise at the desired SNR. The design of the VAD is inspired by the approach presented in [3]. The sequential matrix diagonalisation (SMD) algorithm [4] is used for all polynomial matrix eigenvalue decomposition (PEVD) processing.
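The signal generation described above can be sketched in a few lines of NumPy. This is only an illustration under stated assumptions, not the authors' code: the function name `simulate_microphones`, the choice of a reference microphone for setting the SNR, and the treatment of the convolved interferer as the noise term are all assumptions made for this example.

```python
import numpy as np

def simulate_microphones(speech, interferer, rir_speech, rir_interf,
                         snr_db, ref_mic=0):
    """Hypothetical helper: build noisy, reverberant microphone signals.

    speech, interferer : 1-D arrays (interferer assumed at least as long as speech)
    rir_speech, rir_interf : arrays of shape (num_mics, rir_len)
    snr_db : desired SNR in dB, measured at microphone `ref_mic` (assumption)
    """
    n = len(speech)
    # Convolve each source with its per-microphone RIR, truncated to n samples.
    target = np.stack([np.convolve(speech, h)[:n] for h in rir_speech])
    noise = np.stack([np.convolve(interferer[:n], h)[:n] for h in rir_interf])

    # One common gain for all channels, so inter-channel level
    # differences of the interferer are preserved.
    p_target = np.mean(target[ref_mic] ** 2)
    p_noise = np.mean(noise[ref_mic] ** 2)
    gain = np.sqrt(p_target / (p_noise * 10.0 ** (snr_db / 10.0)))

    scaled_noise = gain * noise
    return target + scaled_noise, target, scaled_noise
```

Scaling the interferer with a single gain, rather than per channel, keeps its spatial signature across the array intact. Whether the SNR in the examples is defined at one microphone or averaged over the array is not stated here, so the `ref_mic` convention should be read as a placeholder.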

Demonstration

The VAD output is obtained by applying the binary mask to the microphone 1 signal, which retains the frames labelled '1' (target speaker present). The residue signal is the difference between the microphone 1 signal and the VAD output, and so contains the frames labelled '0' (target speaker absent). The algorithms used in the comparison include

- WebRTC VAD [5]

- Sohn VAD [6]
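The masking step used to produce the VAD output and residue signals can be illustrated as follows. This is a minimal sketch that assumes non-overlapping frames of a fixed length; the function name `apply_vad_mask` and the `frame_len` parameter are hypothetical and not taken from any of the compared implementations.

```python
import numpy as np

def apply_vad_mask(signal, frame_decisions, frame_len):
    """Hypothetical helper: split a signal into VAD output and residue.

    signal : 1-D array, e.g. the microphone 1 signal
    frame_decisions : sequence of 0/1 labels, one per frame
    frame_len : samples per frame (non-overlapping frames assumed)
    """
    signal = np.asarray(signal, dtype=float)
    # Expand the per-frame labels to a per-sample binary mask.
    per_sample = np.repeat(np.asarray(frame_decisions, dtype=float), frame_len)

    # Samples not covered by any frame decision are treated as '0'.
    mask = np.zeros(len(signal))
    k = min(len(signal), len(per_sample))
    mask[:k] = per_sample[:k]

    vad_output = mask * signal      # frames labelled '1'
    residue = signal - vad_output   # frames labelled '0'
    return vad_output, residue
```

By construction the two outputs sum back to the original signal, so listening to them side by side shows exactly what each detector kept and what it discarded.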

Audio Examples

The audio player is built using the trackswitch.js tool [7].

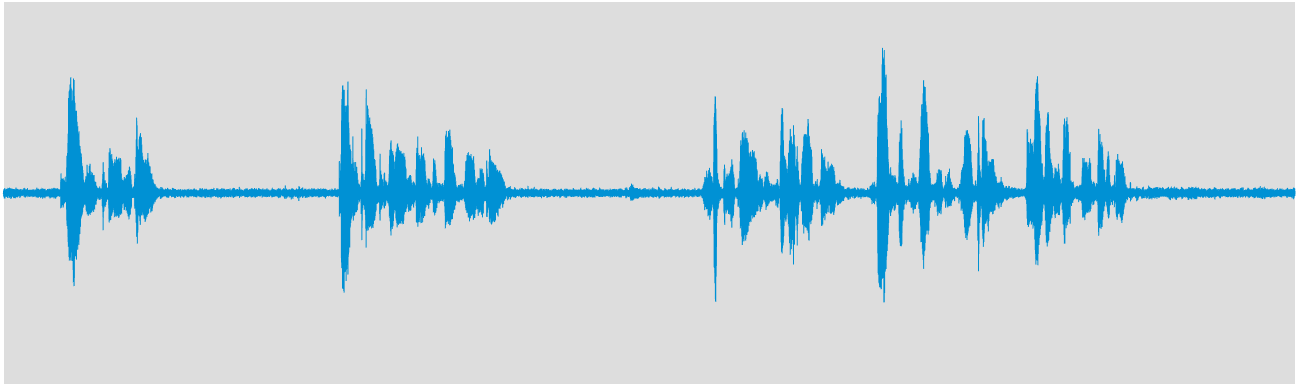

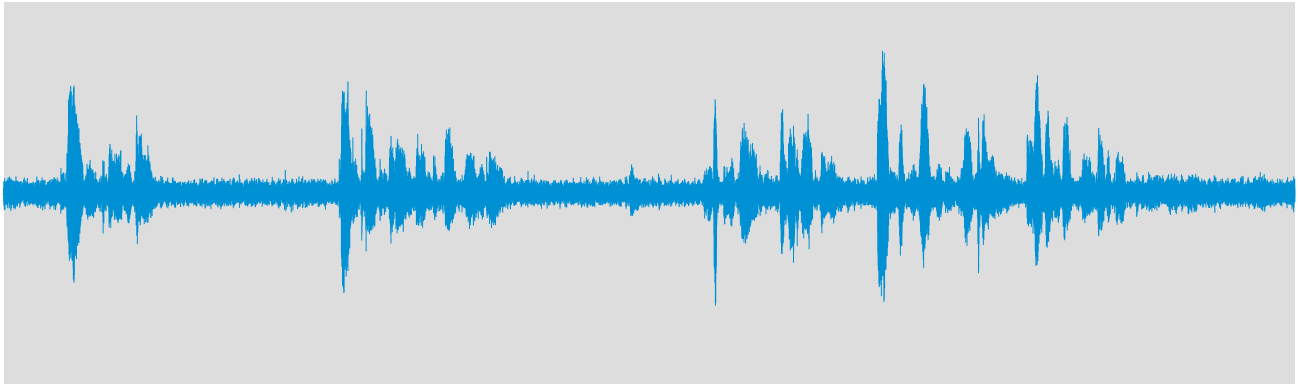

Speech in enclosed room, corrupted by 20 dB directional destroyer noise.

Speech in enclosed room, corrupted by 10 dB directional destroyer noise.

Speech in enclosed room, corrupted by -30 dB directional destroyer noise.

Speech in enclosed room, corrupted by -20 dB directional F16 cockpit noise for long target speech.

Speech in enclosed room, corrupted by -20 dB directional F16 cockpit noise for short target speech.

References

[1] C. Veaux, J. Yamagishi, K. MacDonald, "CSTR VCTK Corpus: English Multi-speaker Corpus for CSTR Voice Cloning Toolkit," The Centre for Speech Technology Research (CSTR), University of Edinburgh, 2017. [Online] Available: https://doi.org/10.7488/ds/1994

[2] H. Kayser, S. D. Ewert, J. Anemüller, T. Rohdenburg, V. Hohmann, and B. Kollmeier, “Database of multichannel in-ear and behind-the-ear head-related and binaural room impulse responses,” EURASIP J. Advances in Signal Process., vol. 2009, no. 298605, pp. 1-110, Jun. 2009.

[3] S. Weiss, C. Delaosa, J. Matthews, I. K. Proudler, and B. A. Jackson, “Detection of weak transient signals using a broadband subspace approach,” in Proc. IEEE Sensor Signal Process. for Defence Conf. (SSPD), 2021.

[4] S. Redif, S. Weiss, and J. G. McWhirter, “Sequential matrix diagonalisation algorithms for polynomial EVD of para-Hermitian matrices,” IEEE Trans. Signal Process., vol. 63, no. 1, pp. 81-89, Jan. 2015.

[5] Google, "WebRTC Voice Activity Detector (VAD)." [Online] Available: https://github.com/wiseman/py-webrtcvad

[6] J. Sohn, N. S. Kim, and W. Sung, “A statistical model-based voice activity detection,” IEEE Signal Process. Lett., vol. 6, no. 1, pp. 1-3, 1999.

Listening examples audio tool

[7] N. Werner, S. Balke, F.-R. Stöter, M. Müller, and B. Edler, "trackswitch.js: A Versatile Web-Based Audio Player for Presenting Scientific Results," in Proc. 3rd Web Audio Conference, London, UK, 2017. [Online] Available: https://github.com/audiolabs/trackswitch.js

Related Works on PEVD Algorithms

[8] J. G. McWhirter, P. D. Baxter, T. Cooper, S. Redif, and J. Foster, “An EVD algorithm for para-Hermitian polynomial matrices,” IEEE Trans. Signal Process., vol. 55, no. 5, pp. 2158–2169, May 2007.

[9] V. W. Neo and P. A. Naylor, “Second order sequential best rotation algorithm with Householder transformation for polynomial matrix eigenvalue decomposition,” in Proc. IEEE Int. Conf. on Acoust., Speech and Signal Process. (ICASSP), 2019.

[10] S. Redif, S. Weiss, and J. G. McWhirter, “An approximate polynomial matrix eigenvalue decomposition algorithm for para-Hermitian matrices,” in Proc. Intl. Symp. on Signal Process. and Inform. Technology (ISSPIT), 2011, pp. 421–425.